4 common mobile A/B testing mistakes that ruin your data

Experienced product managers know that every change in a product needs to be backed by data. A successful change can boost performance through the roof: companies have seen as much as a 400% growth in conversions from only slight changes in UX design.

Unfortunately, that means that every wrong decision comes at a high price.

The easiest and fastest way to test something is through A/B testing. However, a poorly designed A/B test can impact the success of your rollout just as badly as a faulty feature. Let’s see some of the common mistakes in A/B test designs and how to avoid them.

What’s A/B testing?

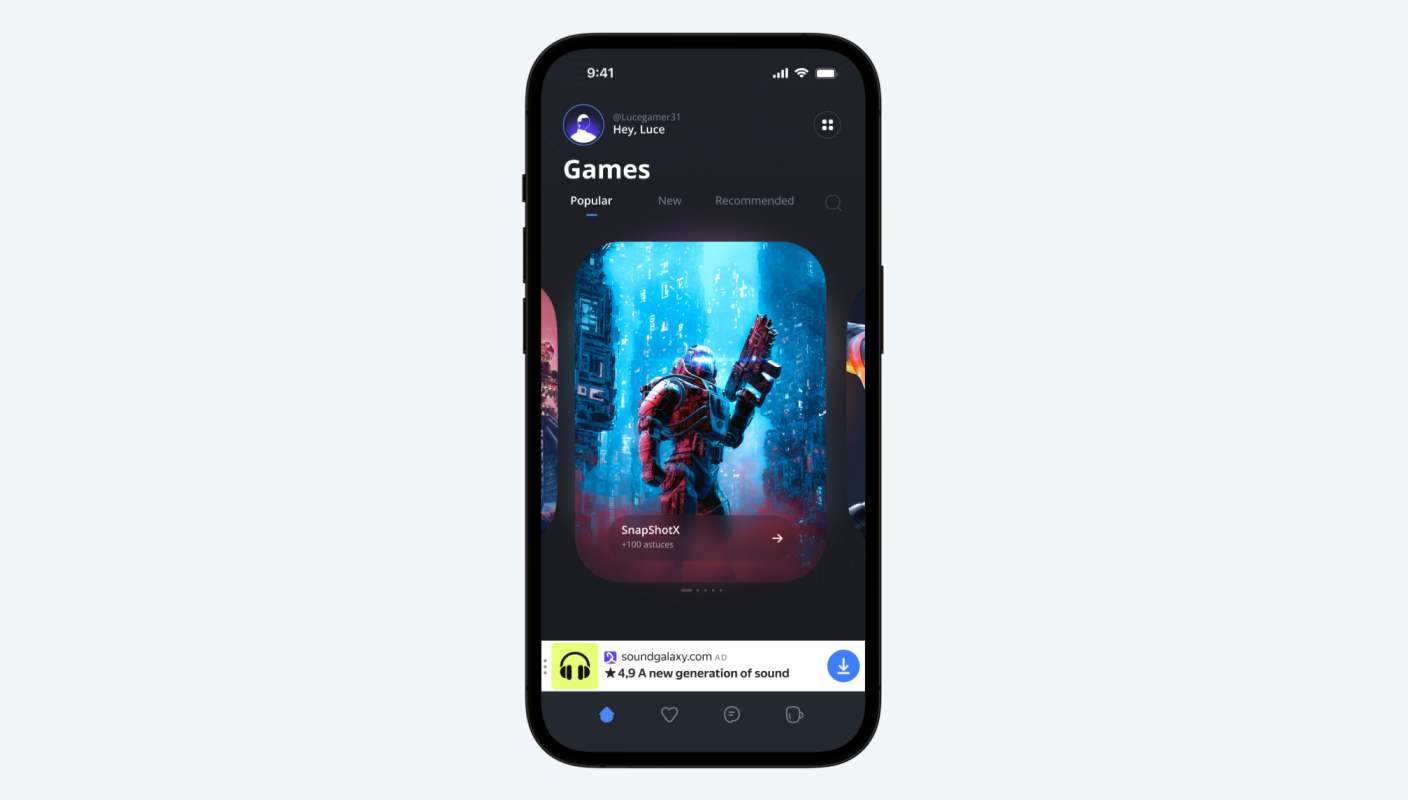

A/B testing is a popular tool that allows you to quickly test a hypothesis about experiences in your mobile app by comparing the users’ engagement with two versions of a feature. You can run A/B tests on any app element or feature, from button copy to full-scale user flows.
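To make the "two versions" idea concrete, here is a minimal Python sketch of how users might be split between variants. It assumes a common approach (hashing a user ID together with an experiment name) rather than describing how any particular tool works; the function name `assign_variant` and the identifiers `user-42` and `secure-payment-badge` are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant for a given experiment."""
    # Hashing the experiment name together with the user ID keeps the
    # assignment stable for each user and independent across experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

# Hypothetical identifiers, for illustration only.
print(assign_variant("user-42", "secure-payment-badge"))
```

Because the assignment depends only on the inputs, the same user sees the same version every time they open the app, which keeps the two groups cleanly separated.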

The method is effective, but it can be tricky to get right. With the right tools, though, you can quickly set up an A/B test and implement data-backed changes soon after.

Ways to run an A/B test on a mobile app

The structure of a well-designed A/B test is similar to a good old scientific experiment. It includes:

- A question

- An if-then hypothesis

- Variables you’ll be testing (app features, copy, design, etc.)

- Ways to measure your results

- A testing tool and/or software

- Results with raw data

- Data analysis and interpretation
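The last two items, raw results and their analysis, can be sketched in a few lines of Python. This example assumes the metric is a conversion rate and uses a standard two-proportion z-test; it is one common way to analyze such data, not the only one, and the numbers are made up for illustration.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No variation at all (nobody or everybody converted): no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Made-up numbers: 200 of 5000 users convert on A, 250 of 5000 on B.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the observed difference is unlikely to be pure chance, but it says nothing about whether the difference is large enough to matter for the business.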

You can set up an A/B test manually by rolling out a feature or element, updating your app version, and observing the users’ behavior. However, the risks of manual A/B testing are high: aside from lots of manual work and data collection, you’ll be running the risk of messing up what already works.

Another option is to use a marketing and analytics tool that’s intuitive and ready-made for A/B testing. For example, Yango App Analytics allows you to launch and manage A/B experiments yourself and then configure your app directly from the App Analytics account without having to release a new app version in the App Store.

Common mistakes in mobile app A/B tests

Though A/B tests are simple to run in theory, there are a few elements in the test design that are easy to miss if you’re working with a complicated feature or just haven’t had practice in a while.

- Not having a clear hypothesis

It may be tempting to run an experiment based on a gut feeling that something is going to work better, but a scientific experiment like an A/B test can only answer a closed-ended question with a limited number of options.

Most commonly, your data will either support or refute your hypothesis. This means your hypothesis should be as specific as possible. It must include the variables you will be testing and the metrics you will want to look at.

For example: “I hypothesize that if [we add a ‘secure payment’ sign next to the credit card field] then [more users will convert].”

- Testing too many variables at once

Though it may seem like an obvious way to save resources, testing too many variables and hypotheses at once is risky business.

Suppose you want to boost user engagement on a product screen. You switch up the visuals, add a tutorial, and make the “buy now” button bigger. Maybe that works! But how will you know which change is making a difference? Welcome back to square one, where you’ll have to test one variable at a time to find out the answer.

- Forgetting to test your A/B tool before the experiment

Let’s not imagine the tragedy of your A/B testing tool breaking your actual app! Before launching your experiment, conduct an A/A test: run your testing software in the background with both groups seeing the same, unchanged version of the app, so you can make sure everything is working properly. An A/A test will allow you to:

— Identify the confidence intervals in your test

— Make sure the tool has been set up correctly

— Eliminate any technical issues in the future
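As a rough illustration of the first point, the Python sketch below simulates many A/A tests and measures how far apart two identical groups drift by chance alone. The simulation only stands in for real A/A data; the 5% base conversion rate, the group size, and the function names are assumptions made for this example.

```python
import random

def simulate_aa_differences(base_rate, users_per_group, runs, seed=0):
    """Simulate A/A tests and return the observed differences in conversion rate."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(runs):
        # Both groups see the same experience, so they share one true rate.
        conv_1 = sum(rng.random() < base_rate for _ in range(users_per_group))
        conv_2 = sum(rng.random() < base_rate for _ in range(users_per_group))
        diffs.append((conv_2 - conv_1) / users_per_group)
    return diffs

def noise_band(diffs, coverage=0.95):
    """Return the (low, high) range that holds roughly `coverage` of the differences."""
    ordered = sorted(diffs)
    tail = (1 - coverage) / 2
    low_idx = int(tail * (len(ordered) - 1))
    high_idx = int((1 - tail) * (len(ordered) - 1))
    return ordered[low_idx], ordered[high_idx]

# Assumed values: 5% conversion rate, 1000 users per group, 300 simulated runs.
diffs = simulate_aa_differences(base_rate=0.05, users_per_group=1000, runs=300)
low, high = noise_band(diffs)
print(f"A/A differences mostly fall between {low:+.3f} and {high:+.3f}")
```

Any difference in a later A/B test that sits inside this band is hard to tell apart from noise. If a real A/A run shows differences well outside it, the setup itself deserves a closer look before the experiment starts.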

There are analytics tools that don’t require advanced coding skills. Consider using an all-in-one solution with built-in A/B testing that minimizes the risks and includes features like Flag configuration, so you can easily implement changes based on test results.

- Overlooking the business results

It’s easy to lose sight of long-term goals in a rush to meet quarterly KPIs. Vanity metrics like conversion rates on product screens are an easy target for A/B testing, but it’s also important to consider how adding that huge “BUY NOW” button will impact your brand and customer satisfaction in the long run.

The cost of rolling out a faulty feature on a mobile app is high. To minimize the risks, product managers can run quick A/B experiments to test hypotheses and optimize their apps based on real user data.